This site is hosted by Solarbotics.net

His contest had already been running for some time (I was at summer camp getting my pilot's license when it started), but I decided to enter it anyway. What I've got here goes a little beyond the contest, but it does demonstrate the soundness of the concepts. My robot started out just emulating his learning circuit but has since evolved into a more complex machine. It is, however, still based on Bruce's learning circuit.

| Controller: | HVW Tech Stamp Stack 2 (Basic Stamp 2) |

| Motors: | Modified servos from Parallax (Futaba) |

| Chassis: | Boe Bot chassis |

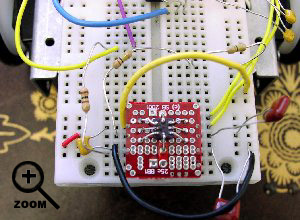

| Sensors: | Analog Devices ADXL 202e accelerometer |

'{$STAMP BS2}

Program Description

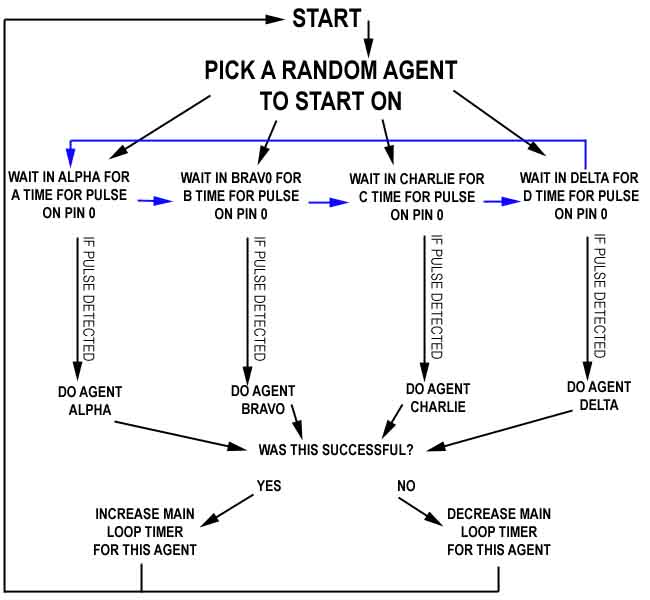

After defining the variables and connections, the program defines the time that will initially be spent on each part of the loop. The parts of the loop are named Alpha, Bravo, Charlie, and Delta. Each has a corresponding timer, which is set to 30 loops at the start.

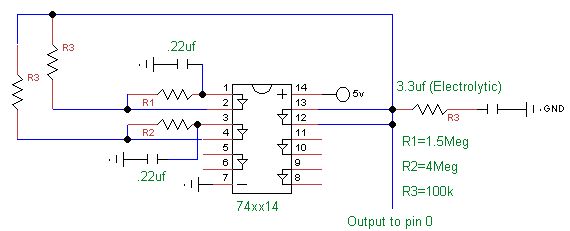

A pseudo-random number is then selected and used to pick which agent to start with. Note that this has nothing to do with the "agent-picker" circuit described earlier.

The program then enters into the main loop. This loop repeats infinitely, cycling through Alpha, Bravo, Charlie and Delta in order. The time spent on each part is governed by the timer variable a, b, c, or d respectively.

Within each part is a line of code that detects a pulse from the agent picker circuit. This pulse arrives on pin zero. When a pulse is detected, the program executes the agent/action associated with that particular segment. For example, if a pulse occurs while the program is in the Bravo segment, the program jumps to "Dobravo." If no pulse is detected in a given section, the timer elapses and the program moves on to the next section. This repeats indefinitely as long as no pulse is detected.
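The cycling described above can be sketched as a small Python simulation (Python rather than the Stamp's PBASIC, so it can be run on a desktop). It only answers one question: given the loop count at which a pulse arrives, which segment is active? The function name `segment_at` and the dictionary layout are assumptions made for illustration, and the sketch assumes the loop starts at Alpha rather than at a randomly picked agent.

```python
SEGMENTS = ("alpha", "bravo", "charlie", "delta")


def segment_at(tick, timers):
    """Return the segment the main loop is in at loop count `tick`.

    `timers` maps each segment name to the number of loops spent in it.
    Returns None when every timer is zero, since no segment ever runs.
    """
    total = sum(timers[name] for name in SEGMENTS)
    if total == 0:
        return None
    position = tick % total  # the loop repeats forever, so wrap around
    for name in SEGMENTS:
        if position < timers[name]:
            return name
        position -= timers[name]


timers = {name: 30 for name in SEGMENTS}
print(segment_at(45, timers))  # a pulse at loop count 45 lands in Bravo
```

A pulse arriving at a random moment therefore lands in a segment with a chance proportional to that segment's timer, which is what lets the timers act as the robot's memory.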

After an agent is executed, the program evaluates the results of the agent. (Please note that no algorithm has been implemented in the above code to check for success. The Charlie agent was arbitrarily selected as the "successful" agent.)

If an agent was successful, its timer would be increased by 20 loops, from 30 to 50, so noticeably more time is spent on that section in the main loop. That means the probability of it being selected is increased. Instead of being 1 in 4 (i.e. 30/120), it is now 5 in 14 (i.e. 50/140, because the whole loop also becomes 20 loops longer). If unsuccessful, its probability is decreased in the same fashion. After this takes place, the program returns to the main loop and continues.
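The arithmetic can be checked with a short Python sketch. It assumes a pulse is equally likely to arrive at any point in the loop, so an agent's chance of being picked is its timer divided by the sum of all four timers. Because rewarding one agent also lengthens the whole loop, the share is computed against the new total. The helper names and the clamp at zero are assumptions for illustration, not taken from the Stamp program.

```python
from fractions import Fraction


def selection_probability(timers, name):
    """Share of the main loop spent in segment `name`."""
    total = sum(timers.values())
    return Fraction(timers[name], total)


def adjust(timers, name, step=20):
    """Return a copy of `timers` with one timer changed by `step` loops.

    A positive step rewards the agent, a negative step penalizes it.
    Clamping at zero is an assumption: a timer cannot go negative.
    """
    updated = dict(timers)
    updated[name] = max(0, updated[name] + step)
    return updated


timers = {"alpha": 30, "bravo": 30, "charlie": 30, "delta": 30}
rewarded = adjust(timers, "charlie", +20)
print(selection_probability(timers, "charlie"))    # 1/4
print(selection_probability(rewarded, "charlie"))  # 5/14
```

With the same step size, one failure takes an agent from 30/120 down to 10/100, and a second failure removes it from the loop entirely.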

Current Research

My efforts are currently focused on using an Analog Devices ADXL 202e 2-axis accelerometer to detect motion. I am attempting to make the robot learn to move in each direction on command. So far I have been able to get it to learn what is forwards, backwards, left and right. But anything more complex (such as spinning in one place) is beyond its recognition capabilities right now due to my limited experience with accelerometers.

In the future, I plan to have a fully autonomous learning robot which is capable of surviving, without a manual battery recharge, for weeks, months, or years on end. This will be accomplished by letting the robot learn what to do when a low battery is detected. It will be equipped with photosensors and solar cells to facilitate the automatic recharging of the batteries. At this stage, it will also be programmed to learn how to respond to objects in its path through infrared sensors and contact sensors. The response to the IR object sensors will be learned, while the response to the touch sensors will be preprogrammed to prevent the robot from ramming into objects continuously, sort of like a human "reflex arc." In humans, these reflex arcs produce reactions to stimuli before the person even knows what is going on. For example, if you touch something hot with your hand, your nervous system sends signals to motor neurons to move your hand. This all happens before the brain is aware of any pain.

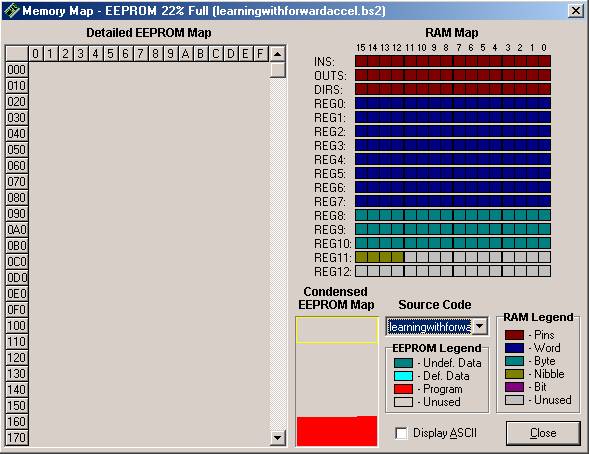

In order to keep the robot from losing its memory if power is removed suddenly (i.e. it "blacks out" like a human may from a severe blow), the timer variables for each specific action would be saved into EEPROM periodically, say every 10 minutes. EEPROM is the same memory space where the program is stored and is not erased when power is lost. (RAM is erased.)
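As a rough desktop illustration of that checkpoint idea, the Python sketch below treats the EEPROM as a plain `bytearray` and stores the four timers as 16-bit words, low byte first. The base address `TIMER_BASE`, the byte order, and the function names are all assumptions for illustration. On the real Stamp the saved values would have to sit at addresses the program itself does not occupy.

```python
import struct

SEGMENTS = ("alpha", "bravo", "charlie", "delta")
TIMER_BASE = 0  # hypothetical EEPROM address reserved for the timers


def save_timers(eeprom, timers):
    """Write the four timers into `eeprom` as little-endian 16-bit words."""
    packed = struct.pack("<4H", *(timers[name] for name in SEGMENTS))
    eeprom[TIMER_BASE:TIMER_BASE + len(packed)] = packed


def load_timers(eeprom):
    """Read the four timers back, e.g. right after power is restored."""
    values = struct.unpack_from("<4H", eeprom, TIMER_BASE)
    return dict(zip(SEGMENTS, values))


eeprom = bytearray(2048)  # stand-in for the Basic Stamp 2's 2 KB EEPROM
save_timers(eeprom, {"alpha": 10, "bravo": 30, "charlie": 50, "delta": 30})
print(load_timers(eeprom))
```

Calling `save_timers` every 10 minutes, rather than after every update, keeps the number of EEPROM writes low at the cost of losing at most the last few minutes of learning.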

By making use of the EEPROM in this fashion, one could also check up on the robot's progress. This would be done by having a string of "Debug" commands which would output all the variables to the computer screen when connected to a computer via a serial cable. These debug commands would only need to run every few minutes, since the serial cable would rarely be connected and it would be a waste of resources to run the commands constantly.

Current performance leads me to believe that approximately 4 tasks can be learned with the Basic Stamp 2. This is based solely on the observation that a little under 1/4 of the program memory is taken up by the program to learn just the forward motion. Since the agents could all be the same for motion, and therefore not take up any more memory, we can say that is just one task. The second task would be phototropism. The third would be obstacle avoidance. The fourth would be either a general survival instinct (which I have yet to work out; this may just come from the overall interaction of the other tasks) or a routine to learn what should be done in the event of a low battery (i.e. sit in one place and recharge, look for the brightest light and then recharge, etc.).

Another thing to work with is the variables. The RAM is much smaller than the EEPROM and is currently at 90% capacity with just one task. This is because the variables used are much larger than they need to be. Their size can be reduced from a "word" (16 bits of data) to as small as a byte (8 bits) or a nibble (4 bits).
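To get a feel for the savings, here is a small Python sketch of the sizing arithmetic. The helper names are hypothetical. One thing it makes visible: a nibble only holds values from 0 to 15, so a timer that starts at 30 would need at least a byte unless the timer values were scaled down.

```python
# Bit widths of the three variable sizes mentioned in the text.
BITS = {"nib": 4, "byte": 8, "word": 16}


def max_value(kind):
    """Largest unsigned value a variable of this size can hold."""
    return (1 << BITS[kind]) - 1


def ram_bytes(count, kind):
    """RAM needed for `count` variables of one size, rounded up to bytes."""
    return (count * BITS[kind] + 7) // 8


def smallest_type(peak):
    """Smallest variable size that can hold values up to `peak`."""
    for kind in ("nib", "byte", "word"):
        if peak <= max_value(kind):
            return kind
    raise ValueError("value does not fit in a word")


for kind in ("word", "byte", "nib"):
    print(kind, ram_bytes(4, kind), "bytes for four timers")
print("a timer that peaks at 50 needs a", smallest_type(50))
```

Four timers drop from 8 bytes as words to 4 bytes as bytes, which halves their share of RAM without changing the learning behaviour.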

Memory Map for Learning Forward Motion

I also have a scheme worked out in my head to use the same main learning loop for every single task. The long-term memory for each task would be saved to a variable (and every once in a while to EEPROM).

Behavioural Observations

I have created a version of the learning code in which the accelerometer is used to evaluate the success of an attempt at motion in the forward direction. One of the agents moves both servos so the bot moves forward, while the other agents move the servos in different directions. Within 5 seconds of first moving, the robot learns which agent results in forward motion and repeats it continuously.
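One way such a success check could work is sketched below in Python. The ADXL202 family reports acceleration as a duty cycle: nominally 50% at 0 g, changing by 12.5% per g. The sketch converts a measured high time `t1` and period `t2` into g and compares the result with a threshold. The threshold value, the function names, and the choice of which axis points forward are assumptions, and the downloadable Stamp code may well compare raw pulse widths instead.

```python
def duty_cycle_to_g(t1, t2):
    """Convert an ADXL202-style duty cycle to acceleration in g.

    t1 is the high time and t2 the full period, in the same units.
    Uses the nominal figures (50% at 0 g, 12.5% per g); a real part
    needs calibration, so treat the result as approximate.
    """
    return (t1 / t2 - 0.5) / 0.125


def moved_forward(t1, t2, threshold_g=0.1):
    """Judge an agent successful if forward acceleration beats a threshold.

    threshold_g is a made-up value for illustration.
    """
    return duty_cycle_to_g(t1, t2) > threshold_g


print(moved_forward(520, 1000))  # 52% duty cycle, about 0.16 g
print(moved_forward(500, 1000))  # 50% duty cycle, 0 g
```

A fixed threshold like this is sensitive to tilt, since gravity shifts the zero point of the reading whenever the robot is on a slope.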

See a video of the robot learning to move forward from power on (Quicktime, 4mb)

Click here to download the code for learning forward motion

Nov. 9th, 2002

An interesting bit of emergent behaviour was also noticed. One of the agents corresponds to turning right. This also generates a forward acceleration, just not as great as when the bot is going directly forward. When one of the servos became unplugged, making it impossible to go totally forward, the robot recognized that the right turn agent was at least getting it somewhat forward, and selected that one as the successful agent. The bot spun in circles, but they were circles in a forward direction.

Another experiment I tried was eliminating the agent which explicitly moved both motors at once to make the bot go forward. I then replaced that agent with a left turn agent. You will remember that there was already an agent for right turn in the software. The last agent was a backward motion routine. In this experiment, the robot was actually able to learn to try the left and right turn agents one after another, which resulted in an overall forward motion! Now that's learning! Remember, there was no command which explicitly made the robot go directly forward.

Nov. 10th, 2002

As I've been experimenting, I've noticed that occasionally the robot will stop moving totally, as if all the loops in the program have gone to zero and all agents have been eliminated. I think this happens because when the robot goes downhill, "less" acceleration is read from the accelerometer than normal. The reading comes up as less than the value that would equate to forward motion, so the agent is deemed a failure. Adding a loop to recalibrate the accelerometer every few minutes would help with this. Another way around the problem, which will probably be implemented regardless, is to restart the learning process when the loops are all equal to zero. This means that if every agent is eliminated, the bot will assume something failed in the software, reset, and try it all over again.

Current Limitations

The robot is currently limited in that it must remain tethered to my wall power supply. This is because the servos draw too much power and cause the Stamp to brown out when batteries are used. The power supply is much more stable in that respect, so I have opted to use it for my experiments with motion up until now. I plan to move to higher voltage batteries and run the motors through a voltage regulator to solve this problem.

Another problem (easily corrected) is that the robot sometimes falls into an infinite loop. If none of the agents are successful, the program will stay in the main loop forever since all the agent timers are now at zero. All motion will stop and the robot will appear idle. I have left it this way so that I know when this condition happens. If I wanted to eliminate this problem, it would be simple to add a routine which would reset all the timers to 30 after an "all zero" condition was reached.
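The reset routine could look something like the following Python sketch, which is a simulation of the idea rather than Stamp code. The function name `reset_if_stalled` is hypothetical; the starting value of 30 is the one used for the timers at power-on.

```python
def reset_if_stalled(timers, initial=30):
    """Restart learning when every agent has been eliminated.

    If all timers are zero, return a fresh set at `initial` loops each.
    Otherwise return the timers unchanged.
    """
    if all(value == 0 for value in timers.values()):
        return {name: initial for name in timers}
    return timers


stalled = {"alpha": 0, "bravo": 0, "charlie": 0, "delta": 0}
print(reset_if_stalled(stalled))  # every timer back to 30
```

Running this check once per pass through the main loop would cost very little, though it would also hide the stall that currently signals something went wrong.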

Becoming Processor-Less

All of the goals described above, including the robot's current behaviour, should be reproducible in a circuit which does not use a microcontroller. The problem here is the enormous parts count, which is why I opted to use a microcontroller.

Well, that's enough ranting for now! E-mail me with your ideas, comments, etc!

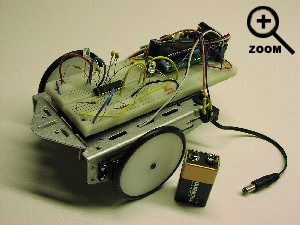

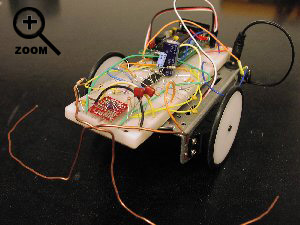

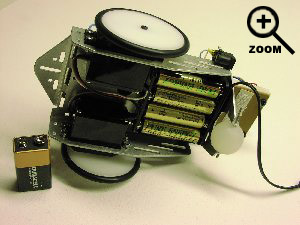

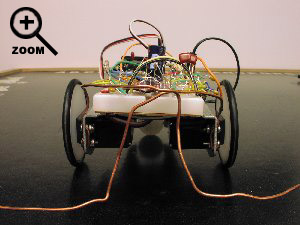

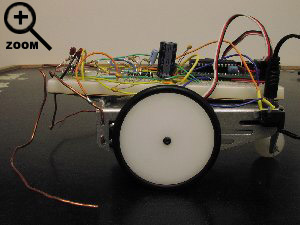

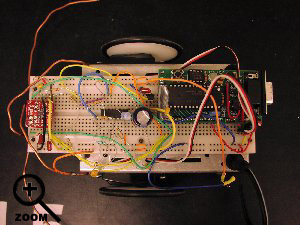

Pictures November 12th